A/B testing is the method of choice for optimising a range of digital material, but do you know how to do it correctly? Many marketers are guilty of doing it wrong or not doing it at all. Whether it’s for your landing page, display adverts or even your print ads, A/B testing offers data you cannot ignore for design optimisation and increased return on investment (ROI).

Often it can seem as if the process is overcomplicated and detracts from the real business of marketing. And yes, if you’re still using manual processes then watching paint dry would be a better use of your time (probably more amusing too). But with a creative management platform (CMP) and this guide to A/B testing, the practice becomes both useful and interesting.

So what is A/B testing?

It should be simple, but it isn’t always. Today there is a multitude of ways to test variations of a design: A/B testing, A/B/n testing, multivariate testing, multi-armed bandit testing, multi-page funnel testing, quantum chromodynamics... you get the point. It’s enough to make you want to give up before you’ve even begun.

But never fear, there is hope! Standard A/B testing is still an effective and incredibly useful tool for optimising your campaign.

A/B testing can be simple: you change just one element of your advert or landing page, then run the test until you can conclude which version is better. Then you begin again with another element. It’s as easy as testing chocolate vs. vanilla ice cream, apple vs. rhubarb crumble, or köttbullar vs. prinskorvar (we’re not sure about that last one).

What should you be testing?

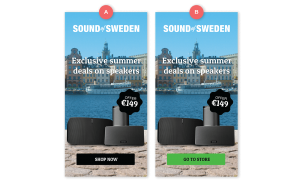

There’s a seemingly endless list of things you can vary with A/B testing. But there are some key features that, when optimised, can really impact your click-through rate (CTR). Below is a list of the most useful ones.

Headline –

Consider the length of your headline and keep it short and sweet. What tone of voice are you using? Are you looking to convey a sense of urgency, or a positive or negative tone? You can also play around with the colour, contrast, font size, and location on the page.

Image –

Is your background image black and white or colour? Does it feature people or products? Do you have one image or several? These are all things to think about when optimising your image.

Call to action (CTA) –

Arguably the most important aspect. You can test the colour of the button, the contrast, the language, and the style of the button itself. You could even test the need for a button at all.

Copy –

See what resonates more with your customers: long-form or short-form. Just make sure you’re explaining the features and benefits concisely.

Landing page forms –

You can test the length of your form, the number of fields and the design itself. Just remember to balance your desire for data with what you’re offering.

A/B test your publishing strategy

You may have the optimal design for your advert, but don’t forget to test your publishing strategy. A/B test your networks. As our Systems Owner, Travis Isaacson, states, ‘you need to make sure your network is providing you with the results that you require for your adverts’. Each network has its own publishers and, as such, different audiences. Although it may be comfortable to stick with the network you know, to get the most relevant traffic you should vary your network as another form of optimisation.

If you’ve made the time to create the best imagery and text then it makes sense to get the highest quality traffic for your advert. You can A/B test optimum audiences, segments, and times of day for your online ads in order to improve ROI.

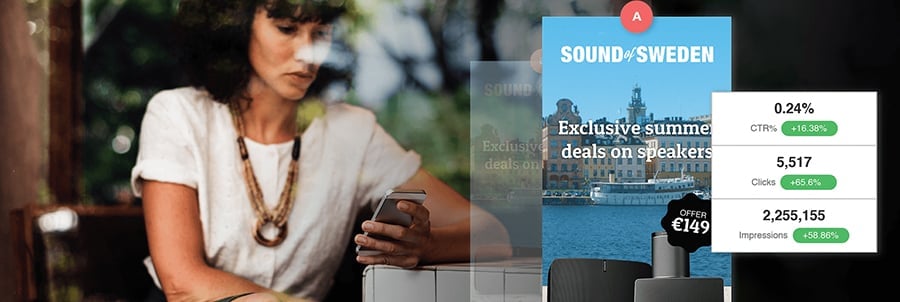

This may all seem a bit overwhelming, but the technology is out there to make the process simpler. With Bannerflow, our tag system is agnostic, which means that you can test your ads across different networks, variants, and segments, and publish directly to them with ease.

How data can help you reach a hypothesis

Using these identifying factors, and after collecting relevant data, it’s time to create a working hypothesis for your A/B test.

A great way to do this is through heatmaps. You can use heatmaps as a real-time analytics tool to identify problem areas. Heatmaps help designers to see which areas attract viewers and which put them off or distract them. They add another layer to your A/B test.

On-page surveys and visitor recordings are another way to identify where visitors are not converting.

From here you can theorise as to why. Once you have a better idea of where the issues on your page or advert are, you can test different variations using the suggestions above. When you have your hypothesis, the real fun of A/B testing begins.

‘Theory, Test, Result, Repeat’: the anthem to A/B testing

Theory –

A/B testing is a process. It’s a simple one too. With a working hypothesis, you can choose how you want to weight each variation and begin the test process. The standard 50/50 split works for all new views.
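For the curious, here is a minimal sketch of how a weighted split could work behind the scenes. It is an illustration only, not how any particular platform does it: the function name `assign_variant` and the idea of hashing a visitor ID are our own assumptions. Hashing means the same visitor always sees the same variation, while the weights decide how traffic is shared.

```python
import hashlib


def assign_variant(visitor_id: str, weights: dict) -> str:
    """Pick a variation for a visitor, in proportion to the given weights.

    Hypothetical helper for illustration; `visitor_id` could be a cookie value.
    """
    total = sum(weights.values())
    # Hash the visitor ID into a stable number between 0 (inclusive) and 1.
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    point = int(digest[:8], 16) / 0x100000000
    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return name
    return name  # fallback in case of floating-point rounding at the top edge


# The standard 50/50 split between two variations.
split = {"A": 50, "B": 50}
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", split)] += 1
print(counts)  # roughly half of the visitors land in each variation
```

Changing the weights to, say, `{"A": 80, "B": 20}` would send most traffic to the existing design while the challenger gathers data more slowly.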

Test –

The length of your A/B test depends on a number of factors. Different ad networks have different traffic levels. Consider also expected conversion rate changes. If you already have high conversion rates then you will need less time to reach statistical confidence. There are tools to automatically calculate this. Once you have reached statistical significance, analyse the results.
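If you want a feel for what those calculators do, the sketch below uses a common textbook approximation for comparing two conversion rates. The function names, the 80% power default, and the example traffic figures are our own assumptions for illustration, so treat the output as a rough guide rather than a definitive answer.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate visitors needed per variation to detect a given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # z-scores for a two-sided test at the chosen confidence and power.
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(sample_per_variant, daily_visitors, variants=2):
    """Days of traffic required if visitors are shared across all variations."""
    return math.ceil(sample_per_variant * variants / daily_visitors)


# Example: 2% baseline conversion rate, hoping to detect a 20% relative lift.
needed = visitors_per_variant(0.02, 0.20)
print(needed, "visitors per variation")
print(days_needed(needed, daily_visitors=3000), "days at 3,000 visitors a day")
```

Try a higher baseline rate, such as `visitors_per_variant(0.10, 0.20)`, and the required sample shrinks, which is why pages that already convert well tend to reach a result sooner.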

Standard practice for A/B testing is that statistical confidence in your data needs to be at least 95%, although it depends on what you’re testing. The more significant the change, the less scientific you need to be process-wise. Small, specific changes, e.g. micro copy, require more data to prove their positive or negative impact, whereas entirely new designs or drastic changes are far easier to assess in terms of conversion.
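As a rough illustration of what sits behind that 95% figure, here is a small sketch of a two-proportion z-test, one common way online calculators arrive at a confidence number. The function name and the example figures are made up for illustration, and reporting confidence as one minus the p-value is a simplification many marketing tools use.

```python
import math
from statistics import NormalDist


def confidence_of_difference(conv_a, views_a, conv_b, views_b):
    """Return a confidence figure (0 to 1) that A and B truly convert differently."""
    rate_a = conv_a / views_a
    rate_b = conv_b / views_b
    # Pool both variations to estimate the shared conversion rate under "no difference".
    pooled = (conv_a + conv_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 0.0  # no conversions (or all conversions) in both: nothing to compare
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided test
    return 1 - p_value


# Hypothetical results: 200 vs. 260 conversions from 10,000 views each.
result = confidence_of_difference(200, 10_000, 260, 10_000)
print(f"{result:.1%} confident", "- call it" if result >= 0.95 else "- keep testing")
```

With a much smaller gap, for example 200 vs. 205 conversions on the same traffic, the figure falls well short of 95% and the test should keep running.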

Result –

It’s entirely likely that your A/B test will come back as inconclusive. In that case you simply need to go back to your hypothesis and begin again with a new idea. Stick with it. Changing a single word in your CTA can increase your CTR by as much as 161%.

Repeat –

When you do reach a decisive conclusion, have the champion variation take 100% of the traffic. After deciding on your next hypothesis, the process can begin again. This can seem like an overwhelming task, especially if you’re doing it all manually. But if you’re working with a CMP, then design adjustments, scheduling, and analytics can all be done in minutes: a comforting thought in the age of agile advertising.

‘Shoulda, Woulda, Coulda’: The dos and don’ts of A/B testing

There are a few practices that you should get into the habit of when you begin A/B testing. And there are some that you should actively avoid. For example:

Do:

Always run variants simultaneously. Traffic can differ wildly from week to week. If you were to test one landing page one week and another a different week then you risk inaccurate data.

Have statistical confidence. Make sure you use a tool or online calculator to measure the statistical confidence in your data. If you conclude your test too early you may make the wrong decision.

Repeat the process. Never test just one variant from the HBICC list. Once you’ve concluded one test, there are plenty of other factors you can optimise.

Don’t:

Test more than one variant at once. If you attempt to optimise email campaigns and landing pages simultaneously, you won’t have a clue why your conversion rates changed.

Run your test for too long. Running your test even after you have reached statistical significance makes it more vulnerable to external factors. Calendar events can cause unnatural spikes in traffic and behaviour.

Ignore the nature of your leads. Make sure your test is in line with your business goals. It may be satisfying to see those conversion numbers rise, but if they’re the wrong customers then those conversions are meaningless.

DCOs and the future of A/B testing

AI-driven dynamic creative optimisation (DCO) doesn’t just offer exciting prospects for creative, but also for testing. Another top tip from Travis: ‘using an AI DCO solution to A/B test offers the opportunity to automate optimisation. You already have the data from your customer base in your DMP/network, you should be putting it to work.’

AI DCO takes content from a feed and chooses which individual items to test per impression. This technology enhances existing ads using multiple predetermined variants so you don’t have to. The process is evolving from manual and arduous to the next level. Much like banner advertising…

Conclusion

There you have it. A simple and straightforward guide to A/B testing. After all, it’s not theoretical physics.

The process doesn’t have to be laborious if you have the right tools to hand. In fact, it’s a matter of having a strong hypothesis and testing until you have the data to prove your changes have made a positive impact on CTR. After that, you can work through every aspect until you have the most effective adverts out there.

If you would like to know more about how our creative management platform can help you with A/B testing, get in contact with us.